I’ve been scrolling through X (formerly Twitter) lately, and honestly, the doomsday predictions have gotten exhausting. You know the ones: “AI will replace us by Tuesday,” or “The algorithms are about to gain consciousness and delete humanity.” It makes for great sci-fi movie plots, but as someone who lives and breathes tech every day, it often feels disconnected from the reality of the code running on our servers.

That’s why I breathed a sigh of relief this week. Jensen Huang, the CEO of Nvidia (the man practically powering this entire AI revolution with his chips), stepped up to the microphone and essentially said: “Relax.”

When the person selling the shovels for the gold rush tells you there isn’t a magic genie in the mine, you listen. Huang’s recent comments about the impossibility of “God-like AI” are a necessary splash of cold water on a fire of hype that has been burning a little too bright.

Here is my deep dive into what Nvidia is really saying, why “AI Fear” is actually hurting us, and why the future is about tools, not overlords.

Deconstructing the “God-Like” AI Fantasy

First, let’s address the elephant in the server room. There’s a term floating around Silicon Valley: AGI (Artificial General Intelligence). In its most extreme definition, people call this “God-like AI”: a system that knows everything, understands physics perfectly, and can reason better than any human in every possible field.

Jensen Huang isn’t buying it.

He argues that the idea of a machine possessing total competence across all domains (understanding the nuances of human language, the complexity of molecular structures, and the laws of theoretical physics all at once) is simply not possible with today’s technology.

Why We Aren’t There Yet

I think it is important to remember what Large Language Models (LLMs) actually are. They’re prediction engines. They’re incredibly good at guessing the next word in a sentence based on probability.

- They don’t “know” physics: They can recite formulas, but they don’t understand gravity the way an apple (or Newton) does.
- They don’t “feel” emotion: They mimic the patterns of emotional language.
- They lack context: A “God AI” would need to understand the chaotic, unwritten rules of the real world.
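To make the “prediction engine” idea concrete, here is a toy sketch of next-word prediction. It is a deliberate simplification: real LLMs use neural networks over tokens, not word counts, and the function names and the tiny corpus below are made up purely for illustration. The core move is the same in spirit, though: look at what came before and pick the continuation with the highest probability.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def next_word_probabilities(follows, word):
    """Turn raw follow-counts into probabilities for the next word."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}

def predict_next(follows, word):
    """Pick the most probable next word, or None if the word was never seen."""
    probs = next_word_probabilities(follows, word)
    return max(probs, key=probs.get) if probs else None

# Hypothetical toy corpus; a real model trains on vastly more text.
corpus = "the apple falls from the tree and the apple hits the ground"
model = train_bigram(corpus)

print(next_word_probabilities(model, "the"))
print(predict_next(model, "the"))
print(predict_next(model, "gravity"))
```

Notice that the model happily says “apple” follows “the” without having any notion of what an apple is, and it has nothing at all to say about “gravity” because that word never appeared in its data. That is the gap between predicting text and understanding the world, in miniature.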

Huang pointed out that no researcher currently has the capacity to build a machine that fully understands these complexities. To quote him directly: “There is no such AI.”

My Take: I find this reassuring. We often confuse “access to information” with “wisdom.” Just because an AI has read the entire internet doesn’t mean it understands what it means to be alive or can solve problems that require intuition.

The Cost of “Apocalypse Anxiety”

Here is where I think Huang hits the nail on the head. He believes that these “exaggerated AI fears” are actually damaging the tech industry and society at large.

When we obsess over a “Terminator” scenario, two bad things happen:

- Misguided Regulation: Governments might rush to ban technologies that could actually cure diseases or solve climate change, simply out of fear of a nonexistent threat.
- Distracted Focus: Instead of fixing real problems (like making AI hallucinate less), developers get bogged down in philosophical debates about robot souls.

Huang calls these “doomsday scenarios” unhelpful. Mixing science fiction with serious engineering doesn’t help a startup founder fix a bug, and it doesn’t help a doctor use AI to diagnose cancer. It just creates noise.

The “Sci-Fi” Trap

We have all been conditioned by movies. We see a robot and immediately think of The Matrix. But Huang is reminding us that we need to look at AI the same way we look at a dishwasher or a calculator. Is a calculator a threat to mathematics? No, it’s a tool that lets mathematicians work faster.

A New Perspective: “AI Immigrants”

This was the part of Huang’s talk that really stuck with me. He used a fascinating metaphor to describe the future of robotics and AI in the workforce: “AI Immigrants.”

He isn’t talking about robots taking your job. He’s talking about robots showing up where humans can’t or won’t work.

In many parts of the world, we face a massive labor shortage. Populations are aging. There aren’t enough people to care for the elderly, manage warehouses, or handle dangerous industrial tasks. Huang suggests that AI agents and physical robots can act as a supplemental workforce.

- The Support Role: Imagine a robot lifting heavy boxes so a human worker doesn’t hurt their back.
- The Efficiency Booster: Imagine an AI handling all the boring data entry so a creative director can focus on design.

He views AI as a way to close the gap between the work we need to do and the number of people available to do it. It’s not about replacement; it’s about augmentation.

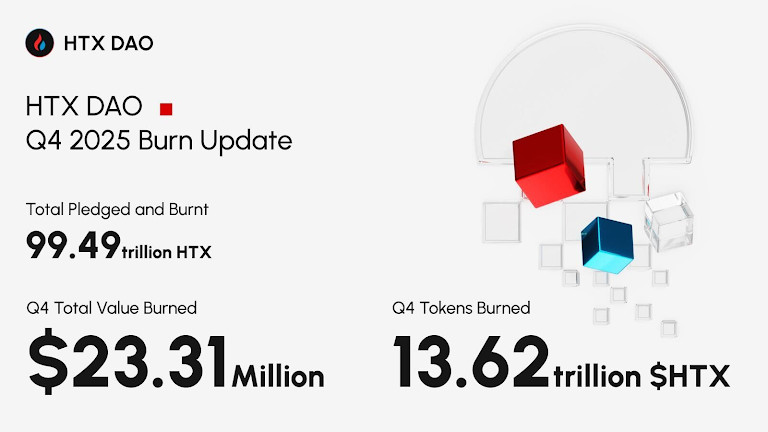

The Economic Reality Check (Stanford & Fortune)

To back up Huang’s pragmatic view, let’s look at the data. I’ve been reading recent reports from Stanford University and Fortune, and they paint a picture that is very different from the hype.

Despite the billions of dollars poured into AI:

- Job Market Impact: The actual disruption to job listings has been surprisingly limited so far.
- ROI (Return on Investment): Many companies are struggling to prove that AI is actually making them more profitable right now.

We’re likely in what analysts call the “Trough of Disillusionment.” The initial excitement is wearing off, and now companies are realizing that implementing AI is hard work. It requires clean data, new infrastructure, and training.

This aligns perfectly with Huang’s stance. If AI were truly “God-like,” it would have fixed the global economy overnight. The fact that it hasn’t proves that it’s just software: powerful software, yes, but still subject to the laws of implementation and economics.

Why Centralized AI is a Bad Idea

Another point Huang touched on, and one I feel strongly about, is the danger of centralization.

He explicitly stated he’s against the idea of a “One AI to Rule Them All.” The concept of a single, super-intelligent entity controlled by one company or one government is, in his words, “extraordinarily ineffective” (and frankly, terrifying).

The Metaverse Planet Philosophy

In the crypto and metaverse communities, we value decentralization. We don’t want one brain making decisions for the planet. We want:

- specialized AIs for biology,
- creative AIs for art,
- logistical AIs for shipping.

We need a diverse ecosystem of tools, not a digital dictator.

While companies like Meta are building massive, nuclear-powered data centers (which is cool in its own right regarding infrastructure), the goal shouldn’t be to build a god. The goal should be to build better assistants.

Final Thoughts: The Path Forward

So, where does this leave us?

If we listen to Jensen Huang, we should stop checking the sky for falling robots. We should stop treating AI like a mystic force and start treating it like engineering.

The “God-level” AI isn’t coming to save us, nor is it coming to destroy us. What we have instead is a collection of rapidly improving tools that, if used responsibly, can make us more productive, healthier, and perhaps a little more creative.

I prefer this reality. It puts the responsibility back on us. The magic isn’t in the machine; it’s in how we choose to use it.

I’d love to hear your take on this: Does Jensen Huang’s statement make you feel more relaxed about the future of AI, or do you think he’s downplaying the risks to keep selling chips? Let’s discuss it in the comments!